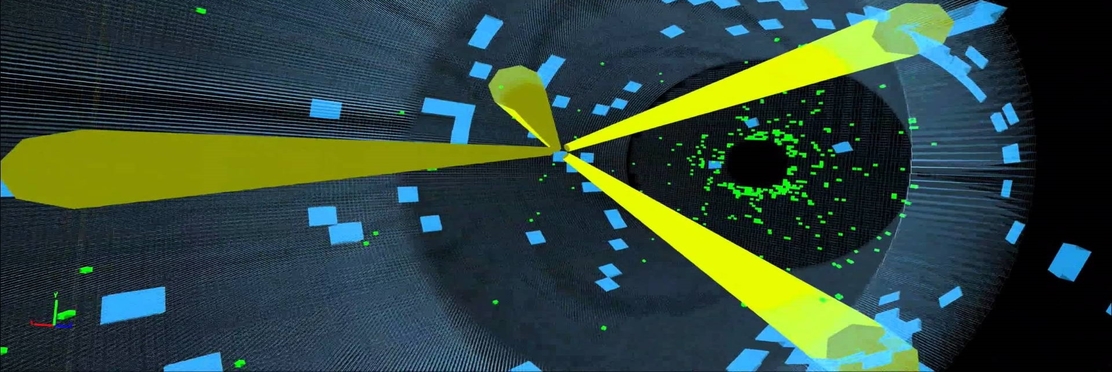

The high energy physics (HEP) agenda for exploring new physics beyond the Standard Model requires identifying rare and elusive signals amid a huge number of background events. The data delivered by the Large Hadron Collider (LHC) is reaching hundreds of petabytes, and its reconstruction, classification and interpretation are extremely challenging tasks, ones that HEP practitioners have always tackled with the best computing tools available, including Machine Learning techniques. Neural Networks (NN) and Boosted Decision Trees (BDT) have long been used in HEP, for instance in particle identification algorithms. The Deep Neural Network (DNN) breakthrough brought the promise of handling large datasets even in the presence of symmetries and complex nonlinear dependencies, and DNNs are now being added to the HEP toolbox.

Geant V

In 2014 our team was awarded a grant from Intel to establish an “Intel Parallel Computing Center” (IPCC). The IPCC aimed to involve the SPRACE computing team in the R&D effort needed to adapt high-energy physics software to modern computing architectures that support multi-threading, vectorization and other parallel processing techniques, making data processing more cost-effective.

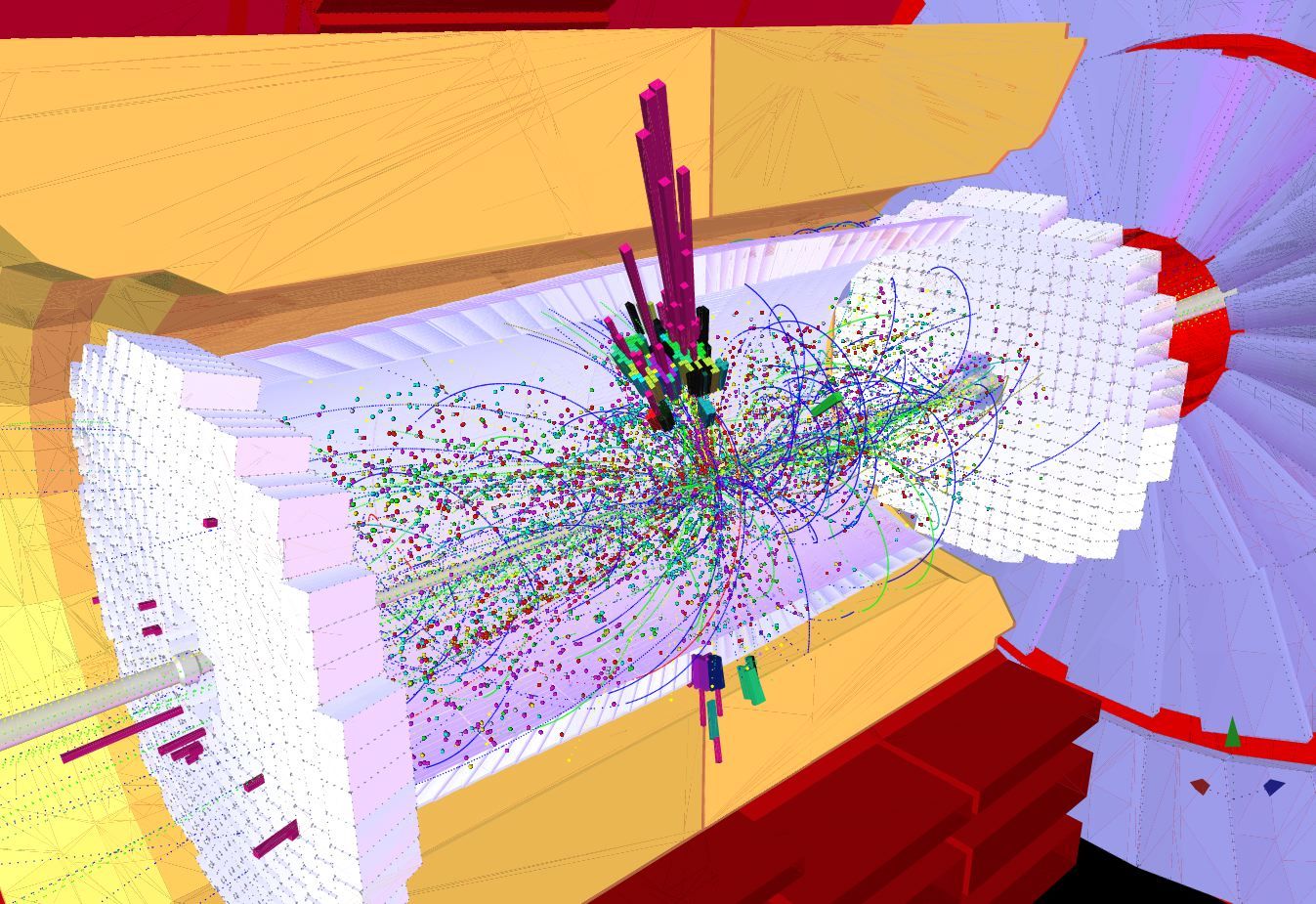

The main goal of the project was the development of Geant V, the next generation of the Geant simulation engine. Geant simulates the interaction between radiation and matter and has numerous applications. At CERN, for instance, it is used to describe how the particles produced in collisions interact with the detectors. It is also employed in radiology and in studies of radiation-hardened electronic circuits.
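To illustrate the kind of Monte Carlo sampling at the heart of radiation transport codes, the toy sketch below samples the distance a particle travels before interacting, s = -λ ln u, where λ is the mean free path and u is uniform on (0, 1], and compares the fraction of particles crossing a slab with the analytic attenuation exp(-d/λ). This is a deliberately simplified, hypothetical model (a single interaction type, no secondaries, no detector geometry); it is not Geant's or Geant V's implementation, and the function names and numerical values are illustrative assumptions.

```python
import math
import random


def sample_free_path(mean_free_path: float, rng: random.Random) -> float:
    """Sample the distance to the next interaction: s = -lambda * ln(u)."""
    # 1 - random() lies in (0, 1], so math.log never receives zero.
    u = 1.0 - rng.random()
    return -mean_free_path * math.log(u)


def transmitted_fraction(thickness: float, mean_free_path: float,
                         n_particles: int, seed: int = 0) -> float:
    """Toy model: fraction of particles that cross a slab without interacting."""
    rng = random.Random(seed)  # seeded for reproducibility
    survivors = 0
    for _ in range(n_particles):
        # A particle survives if its first interaction lies beyond the slab.
        if sample_free_path(mean_free_path, rng) > thickness:
            survivors += 1
    return survivors / n_particles


if __name__ == "__main__":
    lam, d = 2.0, 1.0  # hypothetical mean free path and slab thickness (cm)
    mc = transmitted_fraction(d, lam, 100_000)
    print(f"Monte Carlo: {mc:.4f}  analytic exp(-d/lambda): {math.exp(-d / lam):.4f}")
```

Next-generation engines such as Geant V aim to process many particles together to exploit vectorization; this scalar loop shows only the per-particle sampling step.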

Machine Learning for HEP

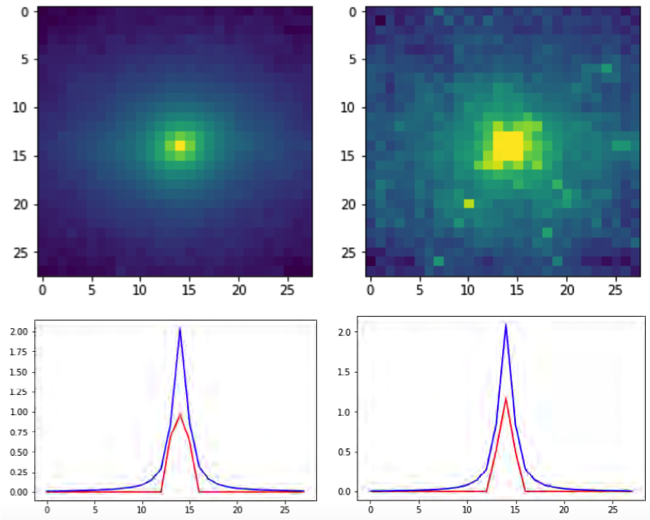

The SPRACE Machine Learning for high energy physics (HEP) team studies and applies Machine Learning techniques to HEP problems such as event generation and particle identification. Generating simulated proton collisions, and in particular modeling the interactions of the outgoing particles with the sensitive modules of the detector, is computationally expensive. Physics studies at the LHC usually require billions of simulated collisions, and simulating a single collision takes around one minute on a modern CPU. We are investigating whether generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), can perform these simulations in a fraction of the time while retaining the full accuracy of conventional methods.
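A back-of-the-envelope estimate makes this cost concrete. The sketch assumes 10^9 events (the low end of "billions"), 60 s per event (the "around one minute" figure), a 365-day year, and a purely hypothetical 1000x speedup for a generative surrogate; none of these numbers are measured results.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # ignores leap years


def cpu_years(n_events: float, seconds_per_event: float) -> float:
    """Single-core CPU time, in years, needed to simulate n_events."""
    return n_events * seconds_per_event / SECONDS_PER_YEAR


if __name__ == "__main__":
    n_events = 1e9           # assumption: low end of "billions"
    full_sim_seconds = 60.0  # "around one minute" per simulated collision
    speedup = 1000.0         # hypothetical surrogate speedup, not a measured value

    full = cpu_years(n_events, full_sim_seconds)
    fast = cpu_years(n_events, full_sim_seconds / speedup)
    print(f"full simulation: {full:.0f} CPU-years")  # prints "full simulation: 1903 CPU-years"
    print(f"hypothetical fast simulation: {fast:.1f} CPU-years")
```

Even at this low end, full simulation needs on the order of two thousand CPU-years, which is why both parallel computing and faster surrogate models are attractive.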

Kytos

Kytos is an open-source platform for Software Defined Networks (SDN) developed by SPRACE. It was conceived to ease the development and deployment of SDN controllers, motivated by gaps left by common SDN solutions. Kytos is also strongly community-oriented, centered on applications developed by its users. Our intention is therefore not only to build a new SDN solution but also to build a community of developers around it, creating new applications that benefit from the SDN paradigm. It is the first SDN platform focused on solving eScience network problems.

Kytos development was strongly supported by major academic network providers, such as ANSP (São Paulo), RNP (Brazil) and Amlight (Latin America), as well as by the Chinese telecommunications company Huawei. The Kytos and Amlight teams are working together to meet the network demands of the LSST experiment. For more information, visit the project website.